When it comes to 3rd party relationships, sometimes you don't know what you don't know. But turning a blind eye isn't an option. There are significant risks when the people running your business don't approach 3rd party relationships thoughtfully. For example, are your employees providing PII to these 3rd parties? Are your employees being given user IDs and passwords to log in to 3rd party websites? Is that login information secured, and is access to those sites removed when employees transfer or are terminated? Those are just a few of the many risks that can exist.

Surveying employees and asking them to identify 3rd party relationships is one approach, but chances are it won't give you a complete picture. An independent method of identification is a good complement to surveys.

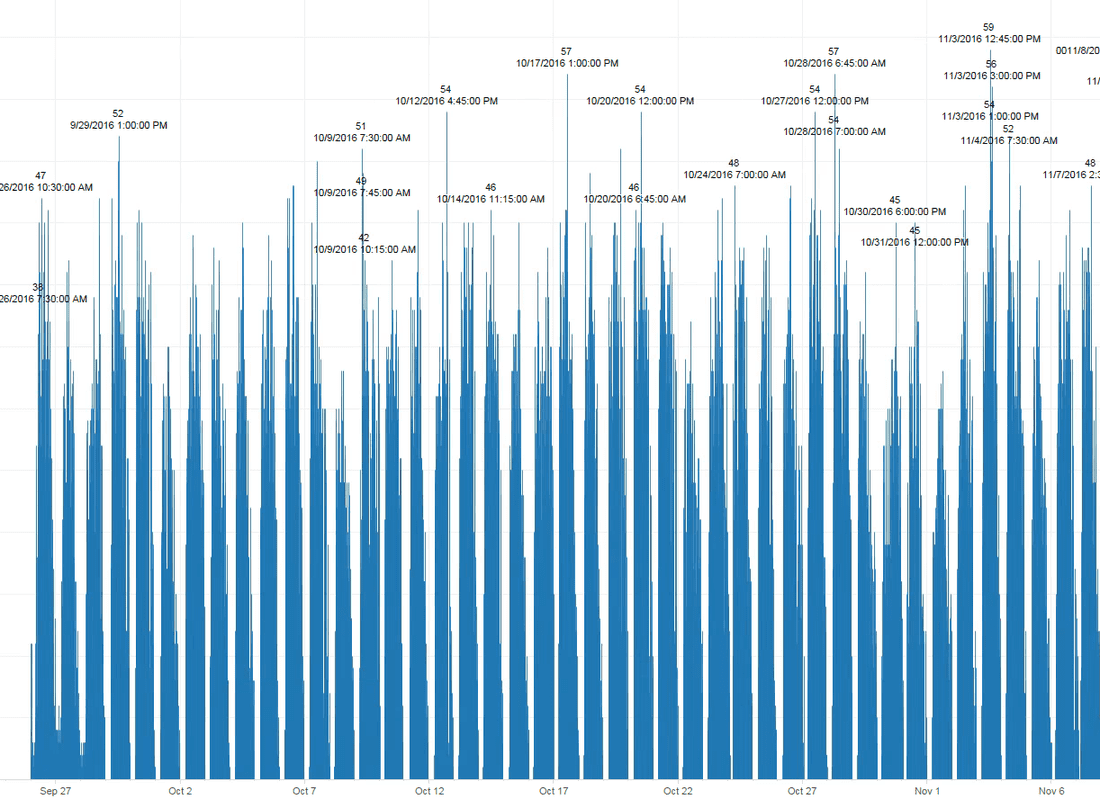

By analyzing your unstructured data, in particular your firewall logs, you can determine which websites are being accessed by multiple employees. You can then visit those websites to judge whether they indicate a 3rd party relationship. For example, a website that provides a service and requires a user ID and password may indicate a 3rd party relationship. This can be accomplished with the following Splunk command:

index="<name of firewall index>" <domain name field>="www.*" [inputlookup <name of lookup file> | rename <employee identifier> as user | fields user] | stats dc(user) as unique by <domain name field> | where unique > 2

We can break this command into several parts:

index="<name of firewall index>" - This searches the index that contains your firewall logs. The index name is specific to your organization, and your IT department can help you identify it.
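
If you are unsure what the firewall index is called, a search along these lines lists the indexes your Splunk role can read, with their event counts. This is a sketch: it only shows indexes your account has permission to search, and an index whose name mentions the firewall or its vendor is a likely (but not guaranteed) candidate.

```spl
| eventcount summarize=false index=*
| stats sum(count) as events by index
| sort - events
```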

<domain name field>="www.*" - You will need to identify the field that contains the domain name. Again, its name will likely be specific to your environment. This part of the command limits the search to the websites you're interested in (host names that begin with "www.").
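
To find the field that holds the domain name, you can sample recent firewall events and summarize their fields. This sketch keeps the same index placeholder as the main command; the 15-minute window and 1,000-event sample are arbitrary choices meant only to keep the search fast.

```spl
index="<name of firewall index>" earliest=-15m
| head 1000
| fieldsummary maxvals=3
| table field distinct_count values
```

Look for a field whose sample values are host names such as www.example.com; that is the field to use in place of <domain name field>.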

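[inputlookup <name of lookup file> | rename <employee identifier> as user | fields user] - You will need to create a lookup (.csv) file that contains the identifiers of the employees you want to review, exactly as they appear in your logs. This will likely be a list of employee IDs. This subsearch uses your lookup file to filter the search to just the set of employees you're focused on (for example, a particular business unit). The column is renamed to user because the subsearch returns user="..." conditions to the outer search, and those only match if your firewall events store the employee identifier in a field called user; adjust the name if yours differs.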
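
Before running the full search, you can check what the subsearch will hand to the outer search. The format command shows the generated filter, which should be a set of user="..." conditions joined by OR. This sketch uses the same placeholders as the main command and assumes the lookup file has already been uploaded (in Splunk Web, under Settings > Lookups > Lookup table files).

```spl
| inputlookup <name of lookup file>
| rename <employee identifier> as user
| fields user
| format
```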

stats dc(user) as unique by <domain name field> - Here you use your domain name field and the employee identifier to count how many distinct employees accessed each website. The count is stored in a field named unique.
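
When you follow up on a website, it can help to know which employees accessed it, not just how many. One possible extension, sketched with the same placeholders, adds the standard values() function alongside dc():

```spl
index="<name of firewall index>" <domain name field>="www.*" [inputlookup <name of lookup file> | rename <employee identifier> as user | fields user]
| stats dc(user) as unique values(user) as users by <domain name field>
```

The users column then gives you a starting list of people to ask about each site.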

where unique > 2 - This limits the results to websites accessed by more than two different employees (that is, at least three). The assumption here is that a website representing a 3rd party relationship would likely be accessed by multiple employees at your organization. If you want sites used by just two employees as well, change the condition to unique >= 2.
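
To make the list easier to review, you might sort it so the most widely accessed websites appear first. This is a small variation on the full command, not a required step:

```spl
index="<name of firewall index>" <domain name field>="www.*" [inputlookup <name of lookup file> | rename <employee identifier> as user | fields user]
| stats dc(user) as unique by <domain name field>
| where unique > 2
| sort - unique
```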

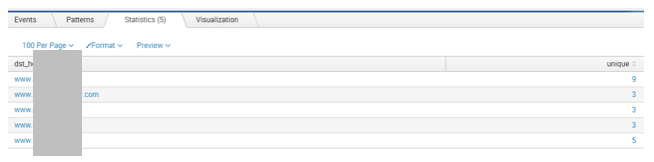

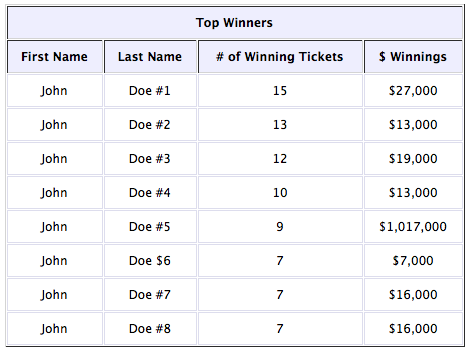

The results of this search will appear similar to the table shown below.

Good luck with your Splunk searches!
